CodingBeats

creating music through code

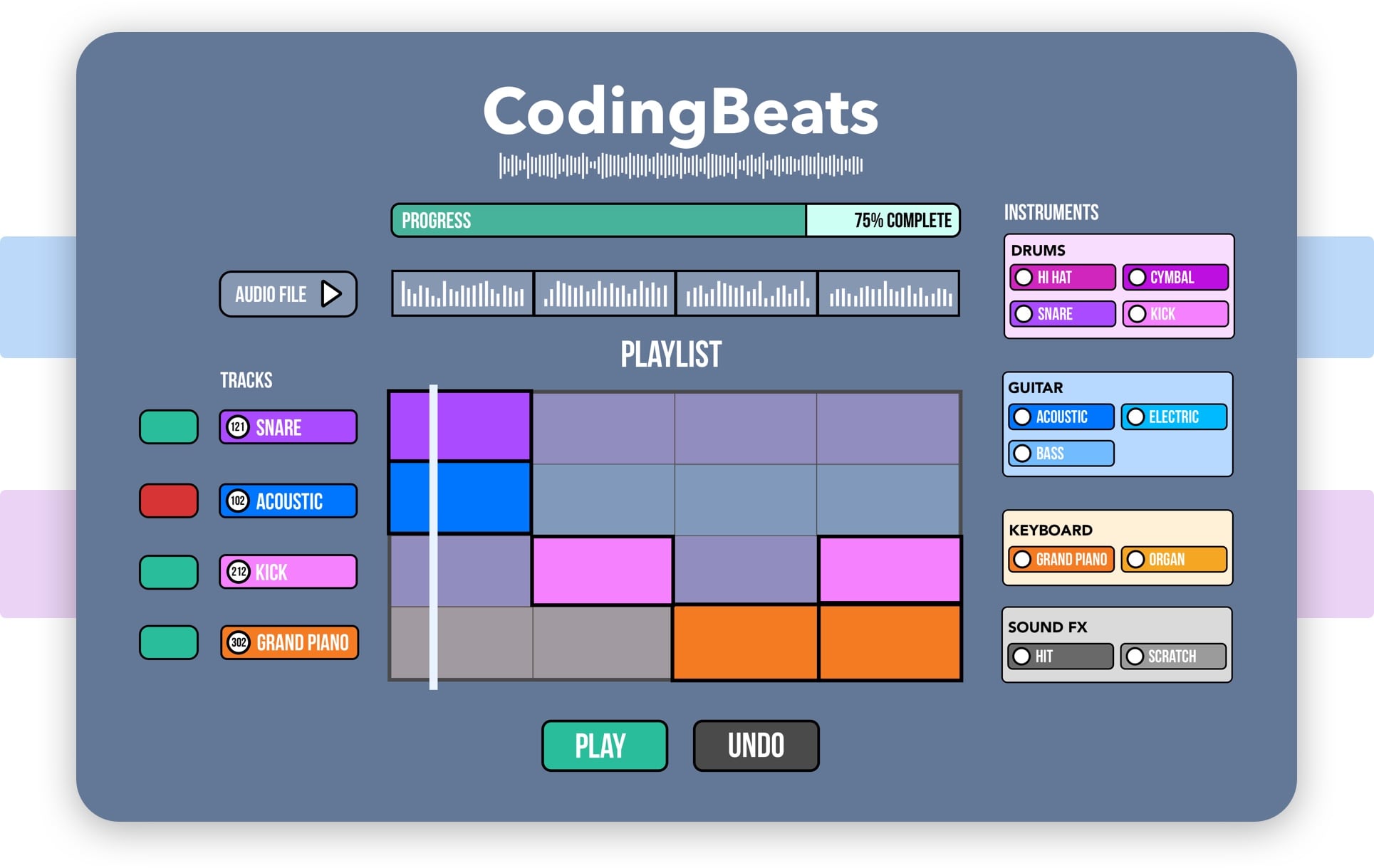

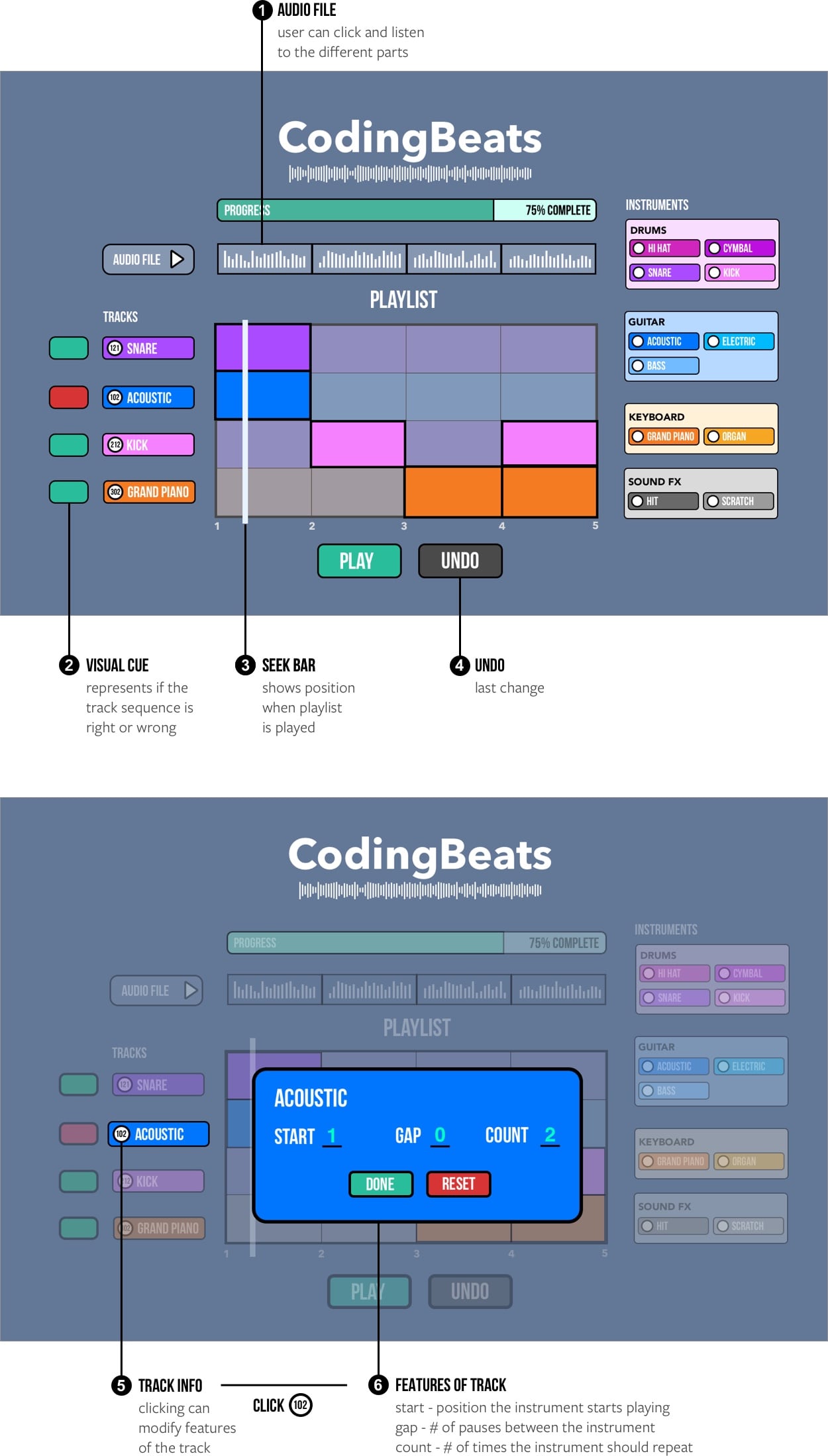

CodingBeats is an interactive experience that lets people with little to no coding experience create music simply by interacting with the application.

- Date March 2017

- Duration 2 months

- Type Team Project

- Role Design, Code, Scrum Master

A brief overview

CodingBeats is an application we built to help novice users create music through code. Through this project we aim to obtain a deep understanding of the HCI guidelines, evaluate systems by conducting usability studies, and finally compare our application against an established product in the same field.

Current Problem

Existing solutions to create music through code aren’t very intuitive, are overwhelming, and have a steep learning curve.

We have learnt that users typically approach an interface like this one in an exploratory fashion, and that with limited feedback they lose interest quickly.

User Research

Focus Group

We conducted user research to understand the requirements and identify opportunities for our application.

To begin with, we conducted a small focus group with seven undergraduate/graduate students from the University of Florida.

A summarised version of our conversation with them has been added to this section.

Summary

1. Age Range?

21 - 30

2. Frustrations when learning a programming language?

Learning syntax, organizing code, logistics and debugging

3. Resources to ease this process?

Documentation, Tutorials

4. How did you learn an instrument?

Instructors, YouTube

5. Experiences while digitally creating music?

Tutorials, constant feedback helped

What we learnt

Our users reported favoring a hands-on approach to learning, well-calibrated challenges, an intuitive and easy-to-learn interface, and informative feedback.

- Importance of goals for both motivation and engagement

- Need for quality feedback

- Need for readily-available information

What we decided

We propose an interface that is a simplified form of music production and features instruments common in the current generation.

A highly visual interface featuring direct manipulation will lower the learning curve. Timely, easily interpreted feedback will be provided automatically.

Hypothesis

Our hypotheses are based on comparison with the existing solution EarSketch.

H1 Our primary hypothesis is that users will report higher motivation for CodingBeats than for EarSketch.

Motivation is a fair measure on which to compare the two systems because generating music computationally is a common task at the core of each system, despite their different capabilities.

H2 The Perceived Competence subscale scores of the Intrinsic Motivation Inventory (IMI) Questionnaire will be higher for CodingBeats than for EarSketch.

H3 The Pressure/Tension subscale scores of the IMI Questionnaire will be higher for EarSketch than for CodingBeats.

Weekly Sprint Feedback

We iteratively designed and built the application, conducting weekly tests for five weeks, each week focusing on a different form of testing.

Week 1 / Sketch

The participant was asked to evaluate the design through a series of questions.

1. What they disliked about the interface

Confused about progress measurement. The lack of exit and help options and the unclear meaning of “gap” made the app confusing.

2. What they liked

The color scheme and visual consistency were received favorably.

Based on the sprint feedback, we retained the color scheme and made the buttons more visually distinct and clickable.

Week 2 / Face Validity

The participant was asked to complete 3 basic tasks: click on everything, drag the track labels, and open the track menus.

1. Asked for her impressions

Confused about the function of the track buttons.

2. How well the app has covered its goals.

Integration of music and computational thinking felt natural but simple, which was promising as the target audience was beginners.

Confusion about the function of the track menu buttons led us to change their dimensions and add visual feedback to indicate their clickability.

Week 3 / Think Aloud

The participant was given two simple tasks and asked to talk through them while performing them.

1. Asked to state interpretation of the music-making terminology used

Responded favorably to the terms used.

2. To describe any expectations that were not met.

Pointed out alignment problems with the grid. An introduction screen was necessary.

The feedback reinforced our confidence in maintaining our interaction techniques. We created 2 help screens with labeled functionality and fixed the alignment issue.

Week 4 / Try to Break It

Participants were asked to perform a task of moderate complexity while deliberately attempting to break the interface, and then to fill out the SUS test.

Create a loop using 3 instruments.

The main error was that clicking “Go Back” erased all the work. Dragged labels would also be hidden behind the grid while moving.

We now save the user’s work when they navigate away, and each track’s configuration options now display blank text boxes. The average SUS score was 70.
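For readers unfamiliar with how a SUS number such as the one above is obtained, here is a minimal sketch of the standard System Usability Scale scoring rule: ten items answered on a 1 to 5 scale, odd-numbered items contributing the response minus 1, even-numbered items contributing 5 minus the response, and the sum multiplied by 2.5. The function names and the sample answers are hypothetical and are not the responses collected in this study.

```python
from statistics import mean


def sus_score(responses):
    """Score one SUS questionnaire: ten answers, each from 1 to 5."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("expected ten responses between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: contribute response - 1
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: contribute 5 - response
            total += 5 - response
    # Scale the 0..40 raw total to the familiar 0..100 range
    return total * 2.5


def average_sus(questionnaires):
    """Average the SUS scores of several participants."""
    return mean(sus_score(q) for q in questionnaires)


# Hypothetical answers from two participants (NOT the study's data)
sample = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [3, 3, 4, 2, 3, 3, 4, 2, 3, 3],
]
print(average_sus(sample))
```

Averaging per-participant scores, as in `average_sus`, is one plausible way an aggregate like the one reported here could be computed.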

Week 5 / User-Needs Evaluation

Three participants were asked to install the application, play with it, and rate it from 1 to 10.

The average score we got for the CodingBeats app was 8.

This was promising, suggesting that gamification of the task made it more satisfying for beginning users.

HCI Guidelines Followed

We followed Shneiderman’s Eight Golden Rules of Interface Design while designing CodingBeats.

Shneiderman proposed this collection of principles that are derived heuristically from experience and applicable in most interactive systems after being properly refined, extended, and interpreted.

Strive For Consistency

- Terminology is consistent throughout the interface.

- The grid displays an instrument’s beats in that instrument label’s colors.

- Next and Go Back buttons are in the same position for each screen, so users need not move the mouse when navigating quickly.

Seek Universal Usability

- Novice users can use the app to create original compositions without worrying about game objectives.

- Seasoned users can accept the challenge to play the game and try to recreate the audio file, or to try and create an even more sophisticated track.

Offer Informative Feedback

- Clicking instrument buttons plays an instrument’s sound.

- Drag-and-drop features a “locking” animation into the slot.

- Correct moves increment the progress bar, and track menu button colors reflect each track’s progress toward the solution.

Design Dialogs To Yield Closure

The track menu offers both “Done” and “Cancel” buttons, so if the user feels uncertain about their changes they can opt not to save them.

Prevent Errors

- Track labels return to the right-hand list if they are dragged and dropped anywhere but near/on a track label slot.

- Track menu input is only saved when valid numbers are input.

Permit Easy Reversal of Actions

- The track menu’s “Cancel” button allows the user to undo configurations they have made.

- Track labels are easily swapped with drag and drop.

Keep Users In Control

User directly manipulates track labels by dragging them to slots and can configure each track using three different parameters.

Reduce Short-Term Memory Load

- Lower-right corner button allows quick toggle between main screen and help screen views for reference.

- Clicking track labels always plays a sample of the instrument’s sound.

- Sections are labeled to help the user comprehend the layout.

User Study

Population: Adults (Aged 18+) with limited to no experience generating music computationally

Total Number of Participants: 38

Condition One: EarSketch (Existing System) N = 19

Condition Two: CodingBeats (New System) N = 19

Procedure

During the study, each student was asked to use either CodingBeats or EarSketch (a competing product).

Post-task, we conducted a survey to collect demographic information and asked the students to fill out an Intrinsic Motivation Inventory (IMI) questionnaire.

The IMI was used to assess participants’ subjective experience related to experimental tasks. The subscales used were:

- Interest/Enjoyment

- Perceived Competence

- Pressure/Tension

Interest/Enjoyment

- I enjoyed doing this activity very much

- This activity was fun to do.

- I thought this was a boring activity.

- This activity did not hold my attention at all.

- I would describe this activity as very interesting.

- I thought this activity was quite enjoyable.

- During this activity, I was thinking about how much I enjoyed it.

Perceived Competence

- I think I am pretty good at this activity.

- I think I did pretty well at this activity, compared to other students.

- After working at this activity for awhile, I felt pretty competent.

- I am satisfied with my performance at this task.

- I was pretty skilled at this activity.

- This was an activity that I couldn’t do very well.

Pressure/Tension

- I did not feel nervous at all while doing this.

- I felt very tense while doing this activity.

- I was very relaxed in doing this activity.

- I was anxious while working on this task.

- I felt pressured while doing this activity.
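To make the subscale scores concrete, the following is a minimal sketch of how IMI subscales are commonly scored: negatively worded items are reverse-scored by subtracting the response from 8 on a 7-point scale, and the subscale score is the mean of its items. The 7-point scale, the choice of which items are reversed (taken here from the usual IMI scoring key, in the order the items are listed above), and every name in the code are assumptions; the study does not state its scoring procedure.

```python
from statistics import mean

SCALE_MAX = 7  # assumed 7-point Likert scale (1 = not at all true, 7 = very true)

# Assumed reverse-scored items, as 0-based positions in the lists above
REVERSED = {
    "interest_enjoyment": {2, 3},   # "boring activity", "did not hold my attention"
    "perceived_competence": {5},    # "couldn't do very well"
    "pressure_tension": {0, 2},     # "did not feel nervous", "very relaxed"
}


def score_subscale(responses, reversed_items):
    """Return the mean item score after reverse-scoring the given positions."""
    adjusted = []
    for position, response in enumerate(responses):
        if not 1 <= response <= SCALE_MAX:
            raise ValueError("responses must be between 1 and 7")
        if position in reversed_items:
            # Reverse-score: 1 becomes 7, 2 becomes 6, and so on
            response = SCALE_MAX + 1 - response
        adjusted.append(response)
    return mean(adjusted)


# Hypothetical answers from one participant (NOT the study's data)
answers = {
    "interest_enjoyment": [6, 6, 2, 1, 5, 6, 4],
    "perceived_competence": [5, 4, 5, 6, 4, 2],
    "pressure_tension": [6, 2, 5, 2, 1],
}
for name, responses in answers.items():
    print(name, round(score_subscale(responses, REVERSED[name]), 2))
```

Keeping the reversed positions in a single table makes it easy to adjust the key if a different IMI variant was administered.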

Analysis & Result

Analysis

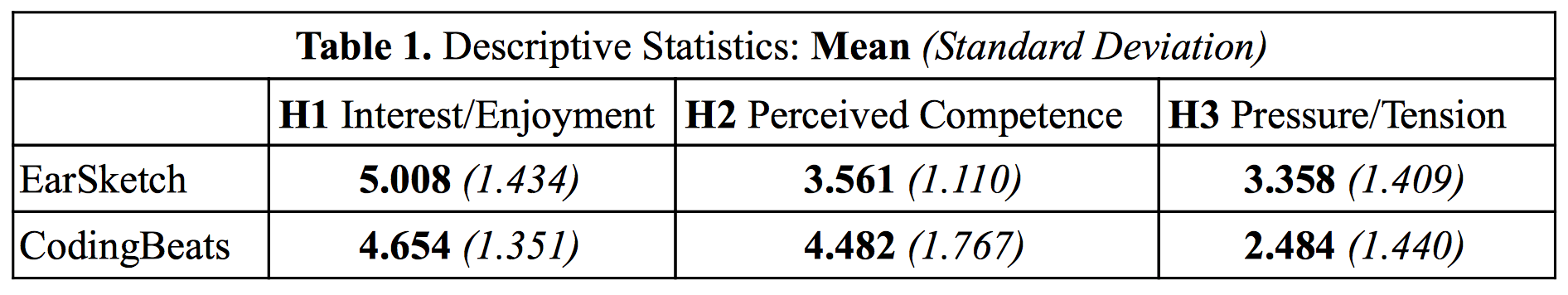

For our first hypothesis, which held that users in the CodingBeats condition would report a higher Interest/Enjoyment subscale score than users in the EarSketch condition, we determined that the scores were normally distributed and therefore conducted a two-tailed Student’s t-test (t(35.869) = .7818). We found that p = .4394 (> .05), and therefore failed to reject the null hypothesis, which holds that there is no significant difference in the Interest/Enjoyment subscale scores between CodingBeats and EarSketch users.

Our second hypothesis held that CodingBeats users would report higher Perceived Competence subscale scores than EarSketch users. Again, scores were normally distributed, and a Student’s t-test found that t(30.2898) = 1.924, p = .0638 (> .05). We therefore fail to reject the null hypothesis, which holds that there is no significant difference in the Perceived Competence subscale scores between the groups. However, because p was close to .05 and the difference in means is relatively large given the groups’ standard deviations, we still consider this difference in our discussion.

Our third hypothesis held that EarSketch users would report higher Pressure/Tension subscale scores than CodingBeats users. These scores were not normally distributed, so a Wilcoxon Signed-Ranks test was conducted, finding Z = 1.730, p = .0837 (> .05). Thus we failed to reject the null hypothesis (that the two groups’ Pressure/Tension subscale scores were not significantly different), but again noted the size of the difference given its proximity to significance.
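The fractional degrees of freedom reported for the t-tests above are what the unequal-variance (Welch) form of the two-sample t-test produces, so the sketch below assumes that variant; the write-up itself only names a Student’s t-test. It computes the t statistic and the Welch-Satterthwaite degrees of freedom for two independent groups. The function name and the scores are hypothetical placeholders, not the study’s data.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Return (t, df) for Welch's unequal-variance two-sample t-test."""
    # Squared standard error of each group's mean (sample variance / n)
    se2_a = variance(group_a) / len(group_a)
    se2_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(group_a) - 1) + se2_b ** 2 / (len(group_b) - 1)
    )
    return t, df


# Placeholder subscale scores (hypothetical, NOT the study's data)
coding_beats = [5.1, 4.8, 6.0, 5.5, 4.9, 5.7]
ear_sketch = [4.6, 5.2, 4.1, 5.0, 4.4, 5.9]
t, df = welch_t(coding_beats, ear_sketch)
print(f"t({df:.3f}) = {t:.4f}")
```

Turning t and df into a p-value requires the t distribution’s cumulative distribution function, which a statistics package would normally supply. For two independent groups whose scores are not normally distributed, a rank-based test for independent samples (such as the Wilcoxon rank-sum test) is the usual counterpart.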

Result

We failed to reject the null hypothesis for both our primary and secondary hypotheses. This suggests that our product performed comparably to the competitor for the tasks we conducted. We plan on improving CodingBeats using the feedback we obtained during this project.

No difference in Motivation (Interest/Enjoyment): CodingBeats’ short learning curve vs EarSketch’s many options - a draw

Greater (non-significant) Perceived Competence in CodingBeats: may support our simple, visual, intuitive interface as approachable for beginners

Greater (non-significant) Pressure/Tension in EarSketch: may support our reduced complexity and ample feedback as reducing anxiety when learning the app
